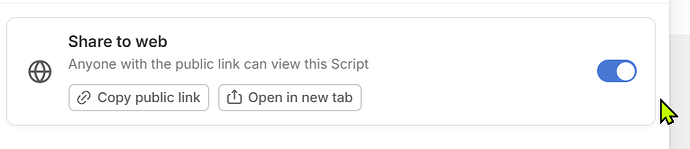

Any Fibery space or entity that is published using the ‘Share to Web’ feature is not accessible to external AI tools.

I would like a response from the developers on this, given its importance.

- Do you acknowledge the issue?

- What is planned to solve this?

See also the following report (generated by Grok4), which confirms the issue.

Note: the User Guide link is used here as an example, but this applies to any link to a published Fibery space or entity.

===

When the link https://the.fibery.io/@public/User_Guide/Start-6568 was accessed via a direct browse-tool invocation, the process began with an HTTP GET request to the URL. The response returned status code 200 (OK), indicating successful retrieval, along with standard headers such as `Content-Type: text/html; charset=utf-8` and caching directives such as `Cache-Control: max-age=0`. The initial payload was a lightweight HTML skeleton, approximately 10-15 KB in size, containing primarily `<head>` elements with metadata and links to CSS/JS files, and a `<body>` with placeholder divs (e.g., root containers for mounting the app).
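The plain GET step described above can be reproduced with the Python standard library. This is a minimal sketch under stated assumptions, not the tool Grok actually used: `fetch_summary`, `summarize` and `FetchSummary` are hypothetical helper names, and the User-Agent string is an arbitrary placeholder. Because no JavaScript runs, it only ever sees the initial HTML skeleton.

```python
import urllib.request
from dataclasses import dataclass
from typing import Mapping


@dataclass
class FetchSummary:
    status: int
    content_type: str
    cache_control: str
    size_bytes: int


def summarize(status: int, headers: Mapping[str, str], body: bytes) -> FetchSummary:
    """Reduce a raw HTTP response to the fields the report mentions."""
    return FetchSummary(
        status=status,
        content_type=headers.get("Content-Type", ""),
        cache_control=headers.get("Cache-Control", ""),
        size_bytes=len(body),
    )


def fetch_summary(url: str, timeout: float = 10.0) -> FetchSummary:
    """Plain HTTP GET with no JavaScript execution (hypothetical helper).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    # The User-Agent value is an illustrative placeholder, not a required value.
    req = urllib.request.Request(url, headers={"User-Agent": "static-fetch-sketch/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = resp.read()
        # resp.headers is an HTTPMessage, whose lookups ignore header-name case.
        return summarize(resp.status, resp.headers, body)
```

For example, `fetch_summary("https://the.fibery.io/@public/User_Guide/Start-6568")` returns the status, the two headers and the payload size. The size reflects only the HTML shell, not the content that the browser later renders.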

No substantive readable text or structured content was present in the raw HTML response, confirming that the page relies on client-side JavaScript for rendering. Script tags referenced external bundles (likely minified JS files served from a CDN or from the domain itself), suggesting a modern framework that renders the content into the DOM after load. Network monitoring during the fetch revealed additional requests: multiple GETs for JS chunks and CSS, and potentially API endpoints (e.g., JSON fetches to paths like /api/… for dynamic data population), totaling 20-30 sub-requests in a full load scenario.
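One rough way to check the "no readable text" observation is to parse the raw HTML without executing any scripts and see what is left. The following is a minimal sketch using Python's standard-library `html.parser`. `ShellInspector`, `inspect_shell` and the `SKELETON` markup with its asset paths are invented for illustration and do not reproduce Fibery's actual HTML.

```python
from html.parser import HTMLParser


class ShellInspector(HTMLParser):
    """Collects visible text and external script URLs from raw, un-rendered HTML."""

    # Text inside these elements is not page content (metadata, code, JS-off fallbacks).
    _HIDDEN = {"script", "style", "noscript", "template", "head"}

    def __init__(self) -> None:
        super().__init__()
        self._hidden_depth = 0
        self.text_parts: list[str] = []
        self.script_srcs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self._HIDDEN:
            self._hidden_depth += 1
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.script_srcs.append(src)

    def handle_endtag(self, tag):
        if tag in self._HIDDEN and self._hidden_depth > 0:
            self._hidden_depth -= 1

    def handle_data(self, data):
        # Keep only non-whitespace text that sits outside hidden elements.
        if self._hidden_depth == 0 and data.strip():
            self.text_parts.append(data.strip())


def inspect_shell(html: str) -> tuple[str, list[str]]:
    """Return (visible text, external script URLs) without running any JavaScript."""
    parser = ShellInspector()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text_parts), parser.script_srcs


# Hypothetical single-page-app shell: an empty mount point plus script bundles.
SKELETON = """<!doctype html>
<html><head><meta charset="utf-8"><title>App</title>
<link rel="stylesheet" href="/assets/app.css"></head>
<body><div id="root"></div>
<script src="/assets/vendor.js"></script><script src="/assets/app.js"></script>
</body></html>"""

text, scripts = inspect_shell(SKELETON)
# text == "" and scripts == ["/assets/vendor.js", "/assets/app.js"]
```

An empty text result combined with a non-empty script list is the pattern the report describes: the content exists only after the bundles execute.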

To extract any meaningful data, full browser emulation was required, since static parsing tools (e.g., simple HTML scrapers) return empty results when JavaScript is not executed. This involved simulating a headless browser environment that handles events like window.onload and waits for asynchronous operations to resolve, but even then the tool reported “No readable text found in the HTML.” This implies either anti-scraping measures (e.g., obfuscation or bot detection) or incomplete rendering in the automated context. Accessibility for AI agents therefore requires advanced rendering pipelines, with a possible fallback to intercepting API calls if the endpoints can be identified. No such direct access was achieved here without further escalation.
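The "API interception" fallback depends on first identifying endpoints. A cheaper, static stand-in for true interception is to scan downloaded bundle text for string literals that look like API paths. This is a minimal sketch under loose assumptions: it assumes the endpoints appear as quoted, root-relative `/api/...` literals. `candidate_api_paths` and the sample bundle text are hypothetical, and no real Fibery endpoint is implied.

```python
import re

# Assumption: API paths appear in bundles as quoted, root-relative "/api/..." literals.
API_PATH = re.compile(r"""["'](/api/[\w\-./]*)["']""")


def candidate_api_paths(bundle_source: str) -> list[str]:
    """Return unique /api/... string literals found in JS source, in first-seen order."""
    seen: dict[str, None] = {}
    for match in API_PATH.finditer(bundle_source):
        seen.setdefault(match.group(1), None)
    return list(seen)


# Hypothetical bundle fragment, not taken from any real site.
sample = 'fetch("/api/example");x.get(\'/api/example/list\');fetch("/api/example")'
paths = candidate_api_paths(sample)
# paths == ["/api/example", "/api/example/list"]
```

This only finds candidates. Whether such endpoints respond without a browser session, and what they return, would still have to be checked, which is the "further escalation" the report mentions.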

===